Joshua Edgcombe admits he didn’t know very much about image processing when he started learning about it a year ago.

It took about four months of learning the basics of how images can be processed, matched up and stitched together in a pipeline. A few more months were spent on learning about feature detection, and homography matrix calculations.

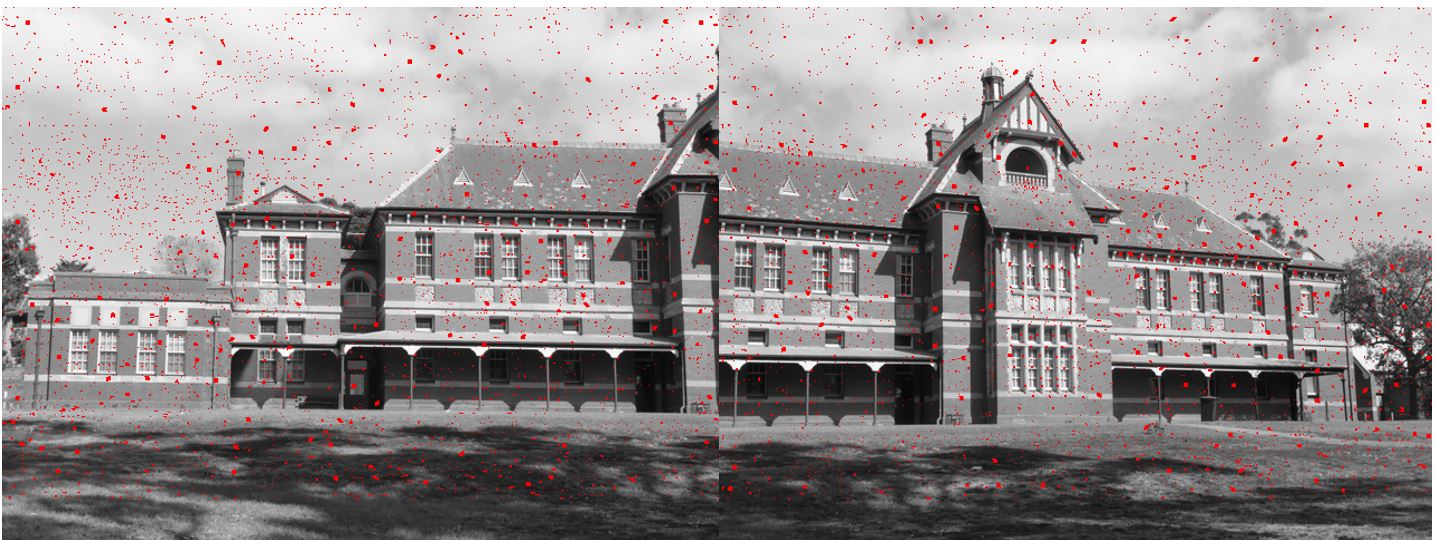

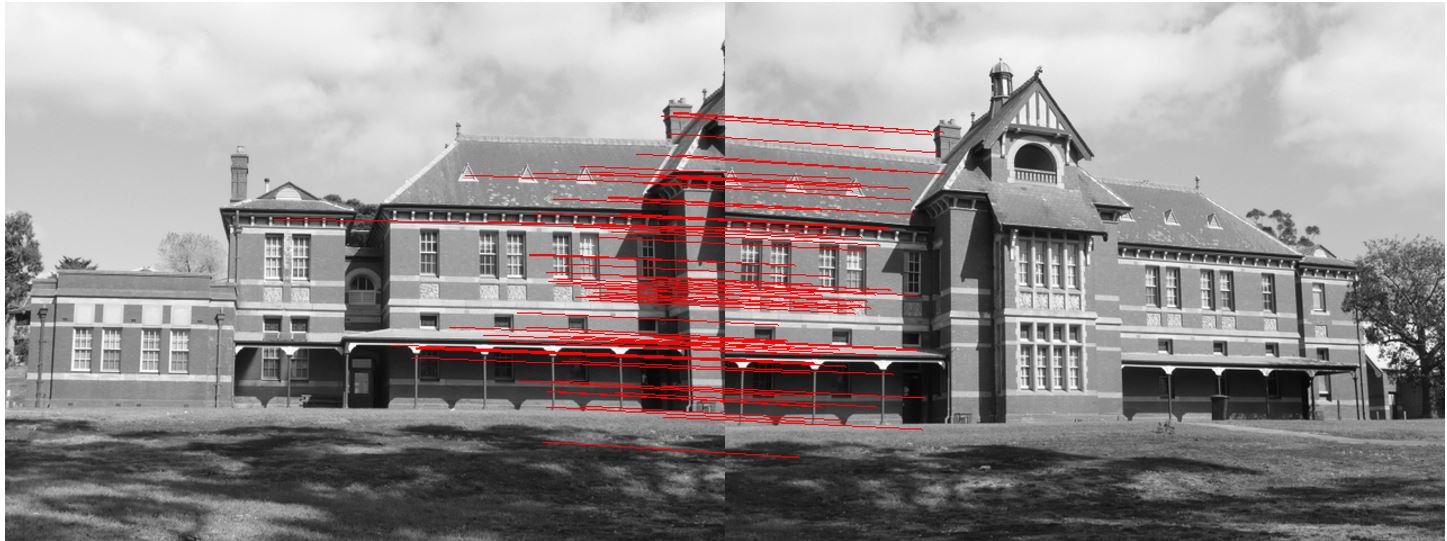

Edgcombe is comparing the resource utilization, power, and latency of an object detection algorithm accelerated on both an FPGA and a GPU for his thesis work at Grand Valley State University. He’s using a from scratch implementation of the SURF (Speeded Up Robust Features) algorithm, which traditionally operates by:

Edgcombe’s system follows this up with matching points for Image registration and mixing.

The SURF algorithm was first presented at the 2006 European Conference on Computer Vision. Its implications have since been discussed at length in textbooks, reference works and academic papers. As such, the foundational calculations necessary to perform the first step in detecting points of interest (i.e. of greatest contrast) are freely available. In addition to accelerating the SURF feature detection algorithm in FPGA programmable logic, Edgcombe’s VHDL code calculates the homography transformation matrix necessary for the fourth step, transforming each image as needed.

Having previously implemented a pipeline supporting two image sensors through an FPGA output over DisplayPort interface to a monitor, Edgcombe was not facing a steep learning curve when it came to designing this system. The greater challenge was in minimizing its footprint. Anything that wasn’t critical to the thesis, and therefore taking resources away from the accelerated feature detection algorithm, had to be scrapped.

Edgcombe expects to see lower latency with the FPGA when compared to the GPU, ” Because the FPGA can begin processing pixels as soon as they are received. GPUs, on the other hand, require the full image to be loaded into memory prior to processing.”

“By the time the last pixel comes in on an FPGA, it should have detected every possible feature location,” he says. “By the time the last pixel comes in on the GPU, then can it begin the feature detection process.”

Edgcombe also predicts the FPGA will use less power than the GPU.

“This is because FPGAs are often built for lower power solutions while GPUs tend to be built for high performance computer systems where power is not as much of a concern,” he says.

Only memory resource usage was compared for both systems. BRAMs and 32-bit registers were used for the FPGA metric while the number of full resolution frame buffers were used for the GPU metric. Only the accelerated SURF feature detection algorithm was analyzed and was broken down into the integral image, filtering, and non-maximal value suppression portions of the pipeline.

Additionally, the amount of memory resources used were compared to the total amount of resources available on both devices. How each system handles scaling of image resolutions with respect to additional memory resources required was also considered.

With regards to memory resource utilization:

With regards to latency:

With regards to power estimation:

This project focuses on a single object detection algorithm, but one that plays a large part in enabling the spatial and navigational awareness necessary in Advanced Driver Assisted Systems (ADAS), and other solutions that require object detection for mission-critical responsiveness.

Edgcombe first came to DornerWorks as an intern/co-op student for the FPGA engineering group in 2019. He became a full-time FPGA engineer at DornerWorks in 2021 and is now contributing to other complex projects using the skills he’s learned at GVSU and through real-life applications. Edgcombe’s first project at DornerWorks involved the test, verification and development of the chip-to-chip interface for radiation-hardened FPGAs used in a solution that enables Ethernet networked systems in space. He now leads that area of the project, helping better the world through engineering.

“The accelerated growth I get by working on significant projects with highly experienced engineers has been the most valuable and enjoyable aspect of working at DornerWorks,” he says.

“I am always striving to learn and grow and the team I’m working with has greatly accelerated that. Not to mention the work we do is very significant.”

If you are a student looking to start your career in embedded engineering, visit our careers page to find an opportunity that suits your goals.