Not too long ago, the path from algorithm to fielded machine learning (ML) model was long and complex. Those with access to an ML expert with a working knowledge of neural network deployment might have had options, but those options were time-consuming to develop.

AMD has shortened that path with the Vitis unified software platform and the recent release of the Kria™ System-on-Module (SOM). DornerWorks is a Kria SOM ecosystem partner and is helping organizations deploy their ML algorithms and IP on this game-changing platform.

DornerWorks, an AMD Premier Partner, is a design services partner with AMD for the launch of the new Kria SOM portfolio. Developers working on ML/AI projects, embedded devices, and system architecture can use DornerWorks' algorithm integration expertise to accelerate development of their products with the Kria SOM's innovative hardware and software features.

DornerWorks engineer Shawn Barber is developing a Kria SOM machine learning demo by deploying an object detection algorithm that locates and measures the distance between people’s eyes. Other applications of the Kria SOM include industrial inspection, search and rescue, safety monitoring in transportation, and autonomous systems.
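To make the measurement step of such a demo concrete, here is a minimal Python sketch of how a distance between two detected eyes could be computed once a detector has returned a bounding box for each eye. It is an illustration only, not the code used in the DornerWorks demo: the `Box` type, the `center` helper, and the `eye_distance_px` function are hypothetical names, and the result is in image pixels rather than a physical unit.

```python
import math
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Hypothetical detector output: an axis-aligned box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def center(box: Box) -> Tuple[float, float]:
    """Return the (x, y) midpoint of a bounding box."""
    return ((box.x_min + box.x_max) / 2.0, (box.y_min + box.y_max) / 2.0)


def eye_distance_px(left_eye: Box, right_eye: Box) -> float:
    """Euclidean distance, in pixels, between the centers of two eye boxes."""
    lx, ly = center(left_eye)
    rx, ry = center(right_eye)
    return math.hypot(rx - lx, ry - ly)


if __name__ == "__main__":
    # Assumed example boxes; a real detector would supply these per frame.
    left = Box(100, 200, 120, 210)
    right = Box(160, 200, 180, 210)
    print(eye_distance_px(left, right))
```

Converting the pixel distance into a physical distance would additionally need camera calibration or a depth estimate, which this sketch leaves out.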

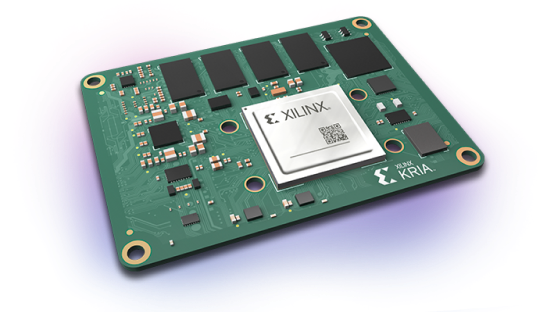

The Kria K26 SOM is built on top of the Zynq® UltraScale+™ MPSoC architecture, which features a quad-core Arm® Cortex™ A53 processor, more than 250K logic cells, and an H.264/H.265 video codec. The SOM also features 4GB of DDR4 memory and 245 IOs that allow it to adapt to virtually any sensor or interface, which makes it well suited to devices built to be connected to the Internet of Things (IoT). This flexibility is one of the most compelling features of the Kria SOM.

Barber says that running the AI detection engine and other components within an FPGA gives developers the option to adapt compute elements as needed. “If you have a very confined set of criteria, you can shrink that FPGA footprint down or grow it if you want more performance. Plus, it gives you the additional flexibility of tying it on to other peripherals like cameras.”

With 1.4 tera-ops of AI compute, the Kria K26 SOM enables developers to create vision AI applications that offer more than 3X higher performance at lower latency and power than GPU-based SOMs. That combination is critical for smart vision applications including security cameras, city cameras, traffic cameras, retail analytics, machine vision, and vision-guided robotics.

For information on DornerWorks’ AMD design services, visit our AMD Alliance Member profile page.

When you’re ready to supercharge your machine learning algorithms with the acceleration features of the Kria SOM, schedule a meeting with our team. We’re here to help you turn your ideas into reality.